With virtual reality (VR) games, and soon augmented reality games, gaining popularity among gamers all over the world, you are no doubt wondering what the big deal is with VR. Virtual reality is exactly what the name says: applications that create a vivid, immersive imaginary reality for their users.

The easiest example of VR is in gaming. With the help of a small pair of black glasses, you can be transported to a spot hundreds of feet above the ground and get the vividly frightening experience of walking a narrow plank (without dying, of course). Experiences like walking among the dinosaurs of Jurassic Park or among skyscrapers become possible through this rising technology.

Augmented reality (AR) and mixed reality (MR) are the two close relatives of VR. AR applications overlay layers of virtual elements on the real world, while MR applications mix and match virtual elements with reality. VR, AR and MR applications are trying to reach most aspects of our lives: gaming, sports and fitness, industry, automotive, healthcare and more, some with greater success than others.

The main challenges are to create new, exciting and valuable experiences, or to enhance existing ones, without damaging usability or hindering the natural ways in which humans perceive and interact with their environment.

Legacy applications were based mainly on stationary visual experiences using headsets and gaming interfaces. Modern applications try to increase immersion and intensify the user experience by allowing more freedom of motion, stimulation of all the senses, and greater interaction with the physical world.

Take a virtual squash game as an example. A legacy version is very similar to a PC game: a stationary experience, augmented by a VR headset and a PlayStation-like controller. A modern version tries to accurately reproduce the sensation of a real game by simulating motion, sound and feel. It lets the user roam around and use a smart bat controller to hit a virtual ball and receive feedback.

How are modern VR systems built?

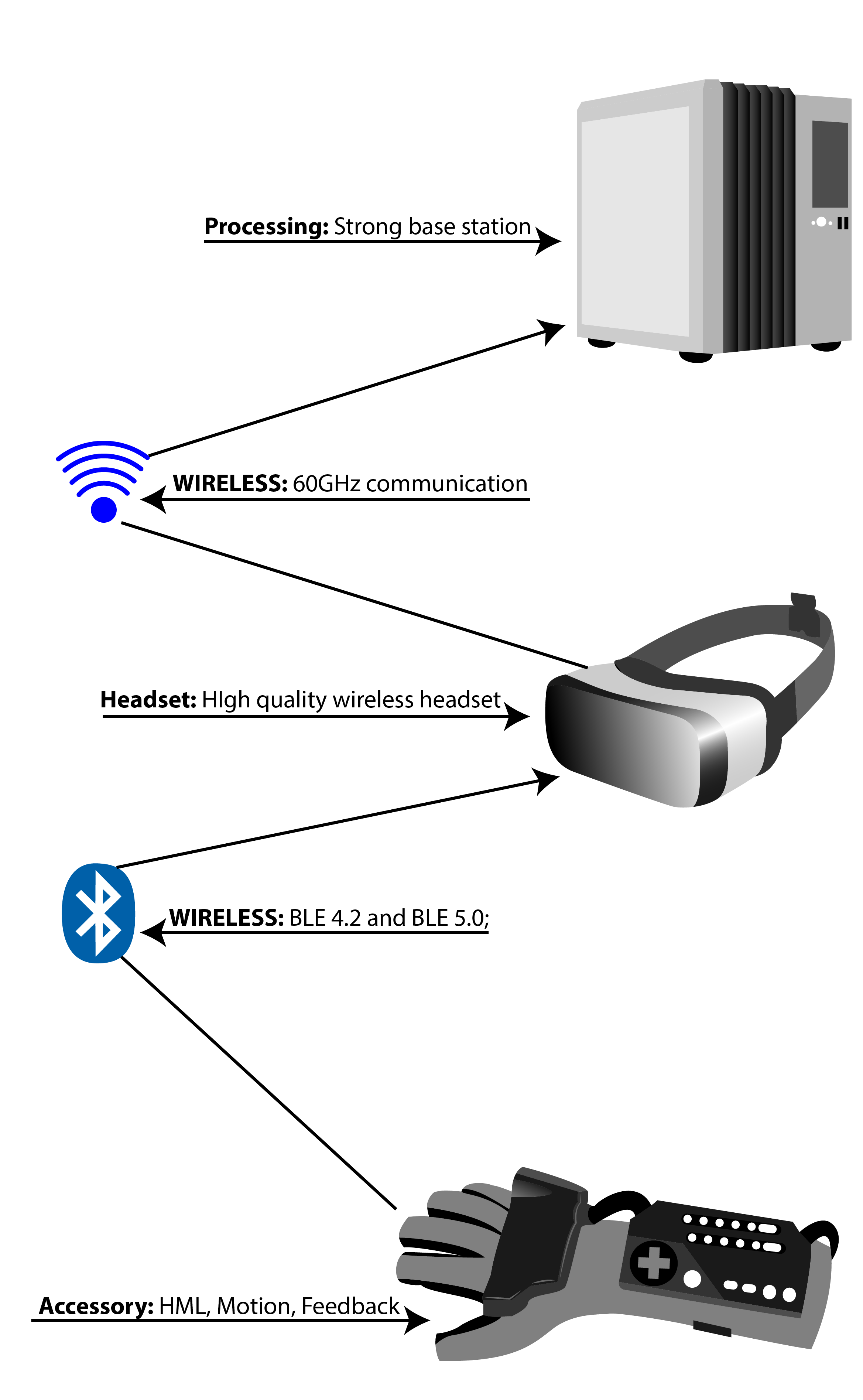

State-of-the-art VR systems are based on the following main elements:

- High-quality wireless headset with orientation and position tracking capabilities (e.g. HTC Vive, Oculus Rift, HoloLens). Position and orientation tracking is achieved by a combination of motion sensors, triangulation techniques (e.g. the HTC Vive Lighthouse IR tracker, which achieves sub-millimeter precision), computer vision, Lidar and other exotic technologies.

- Smart accessories, controllers and wearable devices (e.g. smart bat, smart wheel, smart apparel, smart toothbrush). A good smart controller contains sensors and human-machine interfaces that link the user to the visual experience. It has motion sensors to sense orientation; vibration, touch and sound elements to provide feedback; and buttons, knobs and other inputs to control the visual experience.

- Wireless connectivity between the controllers, headset and base station to allow data exchange. BLE (Bluetooth 4.0 and 5.0) is the main protocol used to connect accessories and headset, though other methods are also used (HTC Vive has developed its own protocol). Wi-Fi and WiGig are the main protocols used to stream media between the wireless headset and the base station (Intel is collaborating with Google to embed WiGig technology in the Oculus Rift device for wireless transmission of high-quality video and audio).

- High-quality parallel processing unit (“base station”) to perform the intensive graphical calculations. Such a processor is sometimes built into the headset (such as the cell phone in the Samsung Gear VR and the dedicated processing units in HoloLens) and often external (the remote PC used with a wireless HTC Vive or a wireless Oculus Rift).
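To make the data exchange between a controller and the base station more concrete, here is a minimal sketch of how a controller report could be serialized before it is sent over the wireless link. The field layout, the names `REPORT_FORMAT`, `pack_report` and `unpack_report`, and the sample values are all hypothetical; no real headset or controller protocol is being described.

```python
import struct

# Hypothetical report layout (little-endian, no padding):
#   uint32  timestamp in milliseconds
#   4 x float32  orientation quaternion (w, x, y, z)
#   uint8   button bitmask
REPORT_FORMAT = "<I4fB"
REPORT_SIZE = struct.calcsize(REPORT_FORMAT)  # 4 + 16 + 1 bytes


def pack_report(timestamp_ms, quaternion, buttons):
    """Serialize one controller report into bytes."""
    w, x, y, z = quaternion
    return struct.pack(REPORT_FORMAT, timestamp_ms, w, x, y, z, buttons)


def unpack_report(payload):
    """Parse bytes back into (timestamp_ms, quaternion, buttons)."""
    timestamp_ms, w, x, y, z, buttons = struct.unpack(REPORT_FORMAT, payload)
    return timestamp_ms, (w, x, y, z), buttons


# Illustrative values: identity orientation, buttons 0 and 2 pressed.
payload = pack_report(1234, (1.0, 0.0, 0.0, 0.0), 0b101)
print(len(payload), unpack_report(payload))
```

A compact binary layout like this keeps each report small, which matters when many reports per second share a low-bandwidth link.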

Challenges

There are many challenges that a developer must overcome when building such an advanced system. Below we address two of the main challenges that relate to the use of smart accessories:

- Tracking: Most if not all controllers use IMUs (inertial measurement units) to track motion. These low-cost devices are based on MEMS technology and contain accelerometers, gyroscopes, compasses and barometers. Using sophisticated algorithms and sensor fusion techniques, they measure acceleration and orientation (expressed as Euler angles or quaternions) with decent accuracy and low drift.

However, those devices are not suitable for measuring 3D position on their own; doing so requires heavy, case-by-case heuristics or help from other means.
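To see why an IMU alone struggles with position, consider a rough sketch: a controller at rest whose accelerometer reports a small constant bias. The bias value, sample rate and function name below are illustrative assumptions, not figures from any specific device. Integrating acceleration twice turns that small bias into a position error that grows with the square of time.

```python
def integrate_position(accel_samples, dt):
    """Naively integrate acceleration (m/s^2) twice to get position (m)."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return position


# Hypothetical numbers: 0.05 m/s^2 bias, 100 Hz sampling, controller at rest.
BIAS = 0.05
RATE_HZ = 100
DT = 1.0 / RATE_HZ

drift_1s = integrate_position([BIAS] * (1 * RATE_HZ), DT)
drift_10s = integrate_position([BIAS] * (10 * RATE_HZ), DT)

print(f"position error after 1 s:  {drift_1s:.3f} m")
print(f"position error after 10 s: {drift_10s:.3f} m")
```

With these assumed numbers the error is about 2.5 cm after one second but about 2.5 m after ten seconds, even though the controller never moved.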

3D position and motion are calculated using triangulation techniques (IR, magnetic fields, laser, ultrasonic), computer vision techniques (stereoscopic cameras, depth cameras), Lidar and other technologies. However, those techniques are expensive, since they require substantial computation resources and extensive infrastructure, and they do not always work efficiently. 3D positioning that requires only simple infrastructure remains a holy grail of VR, AR and MR applications.
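As a minimal illustration of the triangulation idea, the sketch below recovers a 2D position from measured distances to three fixed base stations (often called trilateration). The station coordinates, the function name `trilaterate_2d` and the noise-free distances are assumptions made for the example; real systems work in 3D and must cope with measurement noise.

```python
import math


def trilaterate_2d(anchors, distances):
    """Estimate (x, y) from distances to three known anchor points.

    Subtracting the first circle equation from the other two removes the
    quadratic terms and leaves a 2x2 linear system, solved by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances

    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")

    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


# Hypothetical setup: three base stations and a controller at (1, 1).
anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
true_pos = (1.0, 1.0)
distances = [math.hypot(true_pos[0] - ax, true_pos[1] - ay) for ax, ay in anchors]

estimate = trilaterate_2d(anchors, distances)
print(estimate)
```

Even this toy version needs three fixed, surveyed base stations and an accurate range measurement to each, which hints at the infrastructure cost mentioned above.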

- Synchronization: Accessories, headset and visual application must all operate in a synchronized manner. For example, when the user dodges an obstacle, moves the controller, presses a button or rotates their head, the visual experience must follow precisely, update in real time and (in many cases) send feedback to the accessories.

Real time here means a latency of less than 10 to 20 ms. When this requirement isn’t met, the user may feel uncomfortable, disoriented and dizzy.
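A simple way to reason about this requirement is a latency budget that adds up every stage between a user action and the updated image. The stage names and millisecond values below are hypothetical placeholders, not measurements; the point is only that each layer consumes part of the 10 to 20 ms window.

```python
def latency_report(stages_ms, budget_ms=20.0):
    """Sum per-stage latencies and compare the total with the budget.

    Returns (total, headroom, budget_met).
    """
    total = sum(stages_ms.values())
    return total, budget_ms - total, total <= budget_ms


# Hypothetical per-stage latencies in milliseconds (assumptions, not measurements).
stages = {
    "sensor sampling": 2.0,
    "wireless link": 7.5,
    "sensor fusion": 1.0,
    "rendering": 8.0,
    "display update": 4.0,
}

total, headroom, ok = latency_report(stages)
for name, ms in stages.items():
    print(f"{name:>16}: {ms:4.1f} ms")
print(f"{'total':>16}: {total:4.1f} ms (budget met: {ok}, headroom: {headroom:.1f} ms)")
```

With these made-up numbers the total is 22.5 ms, which misses a 20 ms budget, so at least one stage would have to get faster.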

Meeting this challenge is no small feat because of many technical hurdles, including sampling rates, multiple layers of communication, and extensive computation. With time, VR, AR and MR technologies are all expected to advance even further in the realm of immersive user experience and change the world we see forever.
